You Launched 5,000 Pages. Google Indexed 47.

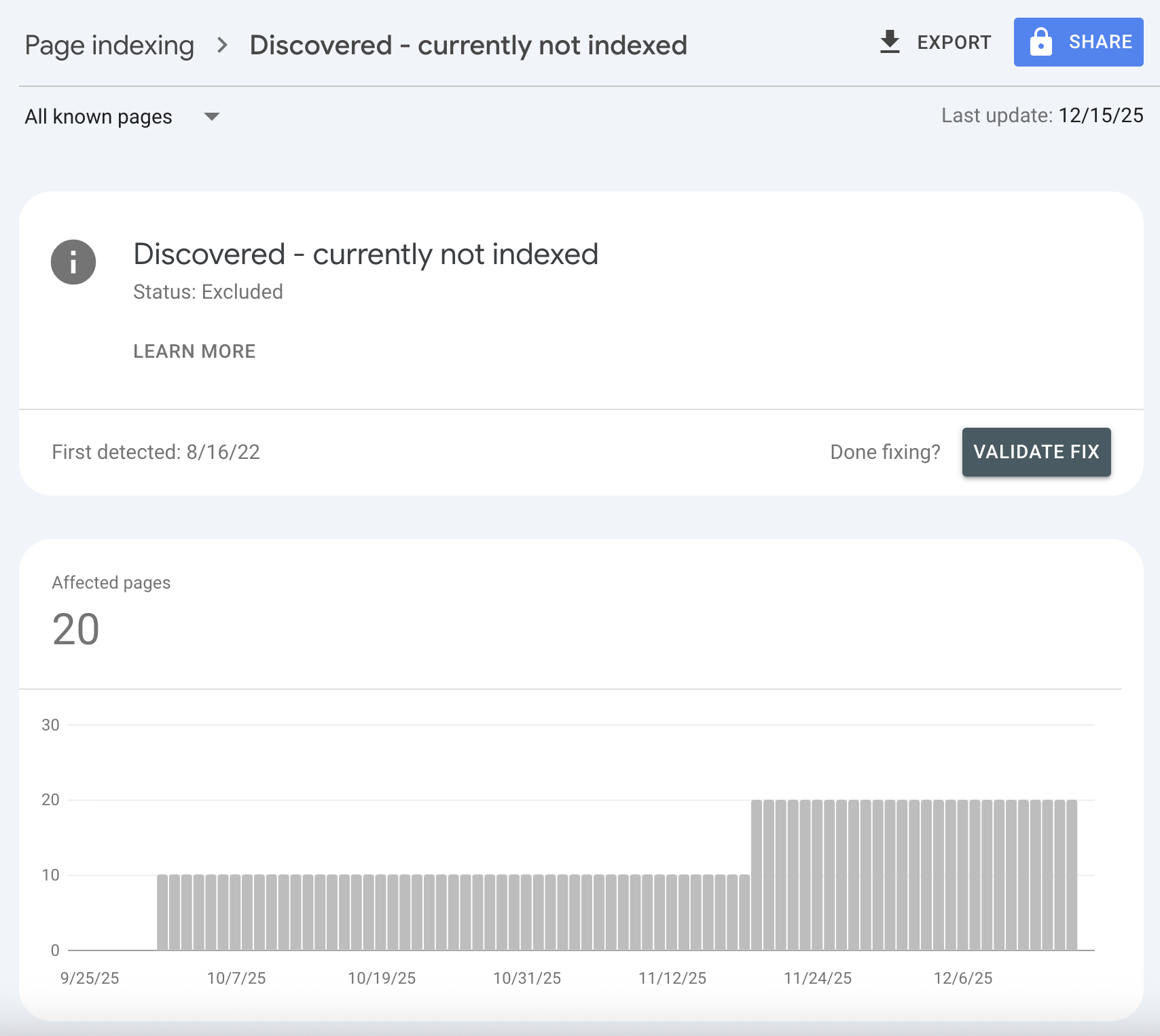

You built the templates. You generated the content. You pushed 5,000 perfectly structured pages live. Then you checked Google Search Console a week later and saw 47 indexed pages. The rest? "Discovered, currently not indexed."

This isn't a bug. It's how Google responds to programmatic SEO done wrong.

I've seen this story play out dozens of times. A startup or agency gets excited about scaling content through automation. They build a database of locations, products, or use cases. They generate thousands of pages in a weekend. They submit the sitemap and wait for the traffic to roll in.

It doesn't.

Programmatic SEO can absolutely work. Zapier, Zillow, TripAdvisor, and countless directory sites have built empires on it. But the gap between "works brilliantly" and "total failure" comes down to a few critical mistakes that most teams make in the first 30 days.

In this guide, you'll learn why bulk page launches fail, how Google decides what to index, and the step-by-step process for scaling content without triggering Google's quality filters.

Programmatic SEO isn't about publishing more pages. It's about publishing pages that deserve to exist.

What Is Programmatic SEO (And Why It's Tempting)

Programmatic SEO is the practice of creating large numbers of pages from templates and databases. Instead of manually writing each page, you build a system that generates pages automatically based on structured data.
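To make that concrete, here is a minimal sketch of the template-plus-data idea in Python. The template, the two data rows, and the URL scheme are all made up for illustration; a real system would pull rows from a database and render far richer pages.

```python
from string import Template

# Hypothetical template and data rows, for illustration only.
PAGE_TEMPLATE = Template(
    "<title>$service in $city</title>\n"
    "<h1>$service in $city</h1>\n"
    "<p>$intro</p>"
)

ROWS = [
    {"service": "Plumber", "city": "Austin", "intro": "Local notes for Austin."},
    {"service": "Plumber", "city": "Denver", "intro": "Local notes for Denver."},
]


def slugify(text: str) -> str:
    """Lowercase the text and join its alphanumeric words with hyphens."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in text.lower())
    return "-".join(cleaned.split())


def build_pages(rows: list[dict[str, str]]) -> dict[str, str]:
    """Return a mapping of URL path -> rendered HTML, one entry per data row."""
    pages = {}
    for row in rows:
        path = f"/{slugify(row['service'])}-in-{slugify(row['city'])}/"
        pages[path] = PAGE_TEMPLATE.substitute(row)
    return pages


pages = build_pages(ROWS)
for path in pages:
    print(path)
```

Notice how little differs between the two rendered pages. That is exactly the weakness the rest of this guide deals with.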

Common programmatic SEO patterns:

| Pattern | Example | Data Source |
|---|---|---|
| Location pages | "[Service] in [City]" | City database |
| Product variations | "[Product] for [Use Case]" | Product catalog + use cases |
| Comparison pages | "[Tool A] vs [Tool B]" | Tool database |
| Directory listings | "[Business Type] in [Location]" | Business listings |
| Template tools | "[Keyword] Generator" | Keyword variations |

The appeal is obvious. Instead of writing 50 blog posts, you create one template and generate 5,000 pages. Each page targets a long-tail keyword with low competition. In theory, you capture thousands of search queries with minimal marginal effort.

Why It's So Tempting in 2026

Three forces are pushing more teams toward programmatic SEO:

1. AI content generation is cheap and fast.

Tools like GPT-5 can generate unique content for each page variation. What used to require expensive writers now costs pennies per page.

2. Long-tail keywords are less competitive.

"Plumber in Austin" has less competition than "plumber." Programmatic SEO lets you target thousands of these specific queries.

3. Successful examples are everywhere.

You can point to Yelp, Nomadlist, or countless job boards as proof it works. The model is validated.

The problem? Those successful examples took years to build authority. They didn't launch 10,000 pages on day one.

Why Bulk Page Launches Almost Always Fail

Here's the uncomfortable truth: Google is suspicious of new sites that suddenly publish thousands of pages. And that suspicion is rational.

Google's Trust Problem

From Google's perspective, a brand new domain that appears with 5,000 pages looks like:

- A spam site trying to game search results

- An AI content farm with no editorial oversight

- A scraper site aggregating content from elsewhere

Google can't manually review every page on the internet. Instead, it uses signals to decide what's worth indexing. A sudden flood of pages from an unknown domain triggers caution, not excitement.

The Crawl Budget Reality

Every site gets a limited "crawl budget," the number of pages Googlebot will crawl in a given time period. For new sites with low authority, this budget is tiny.

Crawl budget factors:

| Factor | Impact on Crawl Budget |
|---|---|
| Domain authority | Higher authority = more crawling |
| Backlink profile | More quality links = faster crawling |
| Site speed | Faster sites get crawled more |
| Content freshness | Frequently updated = more visits |
| Internal linking | Better structure = efficient crawling |

If you launch 5,000 pages but Google only crawls 50 per day, it takes 100 days just to see each page once. And that's before Google decides whether to index them.
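The arithmetic is simple enough to script. This sketch assumes a constant daily crawl rate, which real crawling never is, so treat the result as a rough lower bound. The function name is my own.

```python
import math


def days_to_crawl_once(total_pages: int, pages_crawled_per_day: int) -> int:
    """Days needed for every page to be crawled once at a constant crawl rate."""
    if pages_crawled_per_day <= 0:
        raise ValueError("pages_crawled_per_day must be positive")
    return math.ceil(total_pages / pages_crawled_per_day)


print(days_to_crawl_once(5000, 50))  # 100 days for the full launch
print(days_to_crawl_once(100, 50))   # 2 days for a 100-page batch
```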

The Quality Threshold Has Risen

Google's helpful content system (folded into its core ranking systems in March 2024) and its spam updates have raised the bar for what gets indexed. Pages that would have ranked in 2020 now get filtered before they even enter the index.

The filter asks: Does this page add unique value that doesn't already exist elsewhere?

For many programmatic pages, the honest answer is no. A "[Service] in [City]" page with boilerplate content and a swapped city name adds nothing that Google doesn't already have indexed a thousand times over.

A Real Programmatic SEO Failure (And What Went Wrong)

Let me walk you through a real case I observed in Reddit's BigSEO community. The details are instructive.

The Setup

A team launched a programmatic site targeting symbol and code lookups. Think: pages for every Unicode character, programming syntax, or API reference. Each page had:

- Unique content (the actual symbol/code information)

- Proper metadata

- Clean URLs

- Submitted sitemap

They launched the entire site at once: thousands of pages on a brand new domain.

What Happened

Week 1: Google crawled about 200 pages. Indexed maybe 30.

Week 2: Crawl rate increased slightly. But most pages moved to "Discovered, currently not indexed."

Week 3: Crawl rate dropped. Google had made a decision about the site's quality.

Week 4 and beyond: The site stalled. Thousands of pages sat in limbo. The team tried everything: more backlinks, faster hosting, re-submitting sitemaps. Nothing worked.

The Mistakes

Looking back, the team identified three critical errors:

1. All pages launched simultaneously.

No gradual rollout. No time for Google to build trust. The domain went from 0 pages to 5,000 overnight.

2. No external authority signals.

The domain was brand new with zero backlinks. Google had no reason to trust it.

3. Pages were too similar in structure.

Despite unique content, every page followed the exact same template. Google may have seen them as near-duplicates.

The Lesson

Google doesn't evaluate pages in isolation. It evaluates them as part of a website. If the website hasn't earned trust, individual page quality barely matters. You can have perfect on-page SEO and still get filtered at the site level.

How Google Decides What to Index (The Real Process)

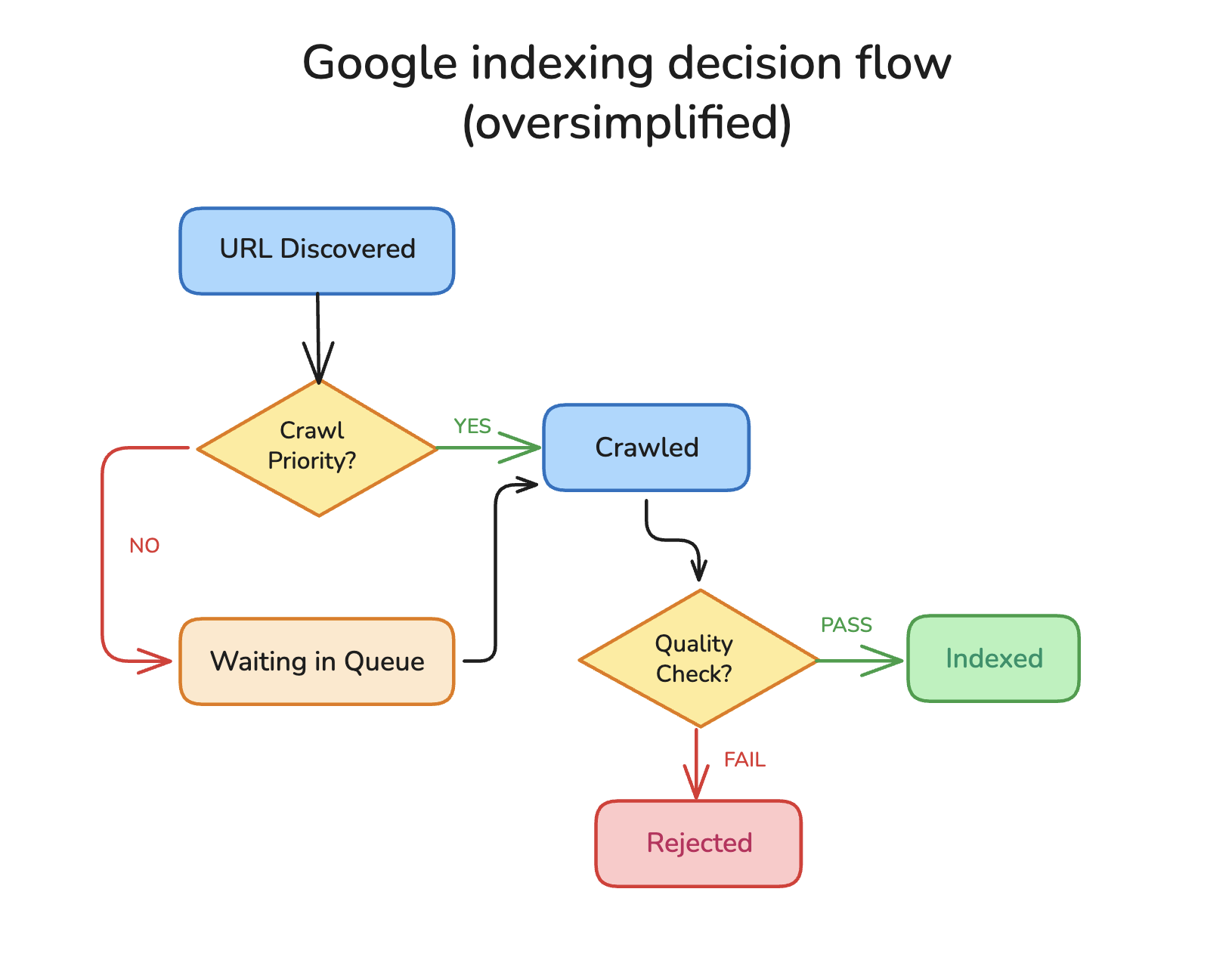

Understanding Google's indexing decision helps you avoid the traps. Google doesn't publish the exact process, but this simplified flow matches what Search Console reports:

Stage 1: Discovery

Google finds your URL through:

- Sitemap submission

- Links from other pages

- Previous crawl discoveries

At this stage, your page enters the crawl queue. This is the "Discovered, currently not indexed" status in Search Console.

Stage 2: Crawl Priority

Google decides when to crawl based on:

- Site authority and trust

- Expected page value (based on similar pages)

- Server response time

- Crawl budget allocation

Low-priority pages might wait weeks or months to be crawled.

Stage 3: Quality Evaluation

After crawling, Google evaluates whether to index. The page must pass:

- Duplicate content checks (is this substantially similar to existing indexed content?)

- Quality thresholds (does this provide value beyond what's available?)

- Spam signals (does this look like manipulation?)

Stage 4: Indexing Decision

The page either:

- Gets indexed and enters the ranking pool

- Gets soft-rejected ("Crawled, currently not indexed")

- Gets filtered as low-quality

- Gets marked as a duplicate, with a different URL chosen as the canonical

The Implication for Programmatic SEO

If Google doesn't trust your domain, it will:

1. Crawl slowly (low priority)

2. Apply stricter quality filters

3. Index a sample, then wait to see performance

This is why sites that launch gradually outperform sites that launch everything at once. Google needs time to verify that your pages provide value before it's willing to index thousands more.

How to Scale Programmatic SEO Without Getting Filtered

The teams that succeed with programmatic SEO follow a different playbook. Here's the approach that works.

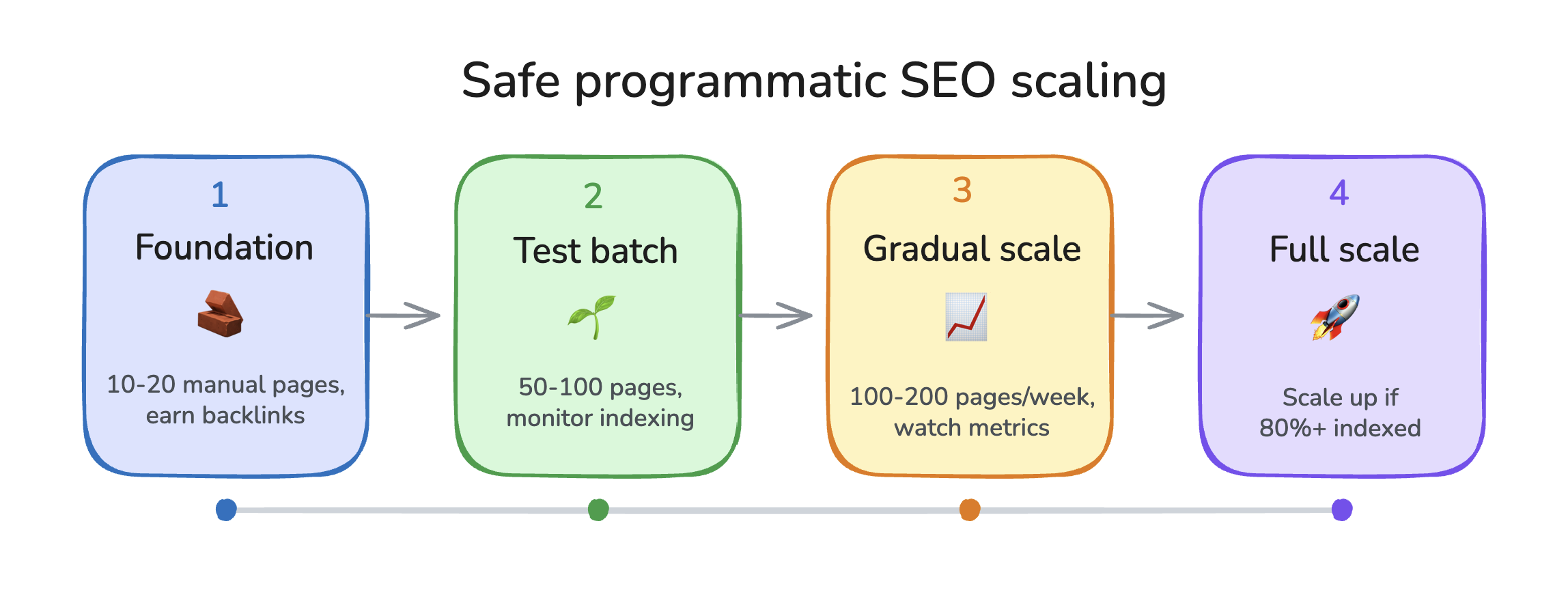

Phase 1: Start With Authority (Before Any Programmatic Content)

Before launching a single programmatic page, establish your domain's credibility:

Build a foundation of manual content.

Create 10-20 high-quality, manually written pages. Blog posts, guides, resources. Content that can earn backlinks and demonstrate expertise.

Earn backlinks to the domain.

Get featured in industry publications, create linkable assets, do outreach. You need external validation before Google will trust your programmatic pages.

Let the domain age.

A 6-month-old domain with consistent activity is treated differently than a week-old domain. Patience matters.

Phase 2: Launch a Small Batch First

When you're ready for programmatic content, don't launch everything. Start with 50-100 pages.

Why small batches work:

| Batch Size | Google's Response |
|---|---|
| 50-100 pages | Evaluates quality, indexes if valuable |
| 500-1,000 pages | Triggers caution, samples before indexing more |
| 5,000+ pages | Assumes spam until proven otherwise |

Monitor what happens with your first batch. If 80%+ get indexed within 2-3 weeks, you have a green light. If most stay in "Discovered, currently not indexed," you have a quality problem to solve.
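If you track batches in a spreadsheet or script, the green-light check can be written down in a few lines. This is a sketch of the 80% rule of thumb above. The function names and verdict strings are my own, and you would read the counts from Search Console yourself.

```python
def indexing_rate(indexed: int, published: int) -> float:
    """Share of published pages that are indexed, between 0.0 and 1.0."""
    if published <= 0:
        raise ValueError("published must be positive")
    return indexed / published


def batch_verdict(indexed: int, published: int, threshold: float = 0.8) -> str:
    """Apply the 80% rule of thumb to a single batch."""
    if indexing_rate(indexed, published) >= threshold:
        return "scale"
    return "fix quality first"


print(batch_verdict(85, 100))   # scale
print(batch_verdict(47, 5000))  # fix quality first
```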

Phase 3: Scale Gradually

Once your initial batch is indexed:

- Add 100-200 pages per week (not per day)

- Monitor indexing rates in Search Console

- If indexing slows, pause and diagnose

- If indexing stays healthy, gradually increase

The 10% rule: Never add more than 10% of your current indexed pages in a single week. If you have 500 indexed pages, add no more than 50.
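Here is the 10% rule as a small calculation, plus a projection of how the weekly cap grows. The projection assumes every page you add gets indexed before the following week, which is optimistic, so treat it as a best case. Both function names are my own.

```python
def weekly_page_cap(indexed_pages: int, percent: int = 10) -> int:
    """Maximum new pages to publish this week, rounded down."""
    return indexed_pages * percent // 100


def rollout_schedule(start_indexed: int, weeks: int, percent: int = 10) -> list[int]:
    """Weekly caps, assuming (optimistically) that every added page gets indexed."""
    caps = []
    indexed = start_indexed
    for _ in range(weeks):
        cap = weekly_page_cap(indexed, percent)
        caps.append(cap)
        indexed += cap
    return caps


print(weekly_page_cap(500))      # 50
print(rollout_schedule(500, 3))  # [50, 55, 60]
```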

Phase 4: Strengthen Internal Linking

Programmatic pages often have weak internal linking. Fix this by:

- Linking from manual content to programmatic pages

- Creating hub pages that link to related programmatic pages

- Adding contextual links between related programmatic pages

- Building navigation that exposes programmatic pages to crawlers

Strong internal linking helps Google discover and value your programmatic pages.
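One way to check your linking is to ask which pages a crawler could never reach by following links from the home page. The sketch below does a breadth-first walk over a link map. The `links` dictionary and the URLs are hypothetical, and in practice you would build the map from a site crawl.

```python
from collections import deque


def unreachable_pages(
    links: dict[str, set[str]], all_pages: set[str], start: str = "/"
) -> set[str]:
    """Pages that cannot be reached by following internal links from `start`."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target in all_pages and target not in seen:
                seen.add(target)
                queue.append(target)
    return all_pages - seen


# Hypothetical site: the hub page links to two city pages, but not to Boise.
all_pages = {
    "/", "/blog/guide/", "/plumbers/",
    "/plumber-in-austin/", "/plumber-in-denver/", "/plumber-in-boise/",
}
links = {
    "/": {"/blog/guide/", "/plumbers/"},
    "/plumbers/": {"/plumber-in-austin/", "/plumber-in-denver/"},
    "/plumber-in-boise/": {"/"},
}

print(unreachable_pages(links, all_pages))  # {'/plumber-in-boise/'}
```

The Boise page links out but nothing links in, so the sitemap is the only way a crawler can discover it.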

The Programmatic Page Quality Checklist

Before launching any programmatic page, it should pass every item on this checklist. If it doesn't, fix it or don't publish it.

Content Quality

- Page has substantial unique content (not just swapped variables)

- Content answers a real search query with useful information

- Page provides value beyond what's in the SERP already

- Content is accurate and factually correct

- No thin content (minimum 300-500 words of substantive text)

Technical SEO

- Clean, descriptive URL structure

- Unique title tag with target keyword

- Unique meta description

- Proper heading hierarchy (H1, H2s)

- Fast page load (under 3 seconds)

- Mobile-friendly layout

Indexability

- Page is in XML sitemap

- Page has internal links pointing to it

- No noindex tag (unless intentional)

- Canonical tag points to self (not a different page)

- Page returns 200 status code
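Three of these checks (status code, noindex, canonical) are easy to automate once you have the page's HTML. The sketch below uses only Python's standard library and takes the URL, status code, and HTML as inputs. Fetching the page, checking the sitemap, and counting internal links are left out. The class and function names are my own.

```python
from html.parser import HTMLParser


class HeadTags(HTMLParser):
    """Collects the robots meta directive and the canonical URL from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.robots = ""
        self.canonical = ""

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "robots":
            self.robots = attr.get("content", "").lower()
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href", "")


def indexability_issues(url: str, status: int, html: str) -> list[str]:
    """Return a list of problems; an empty list means these checks passed."""
    parser = HeadTags()
    parser.feed(html)
    issues = []
    if status != 200:
        issues.append(f"status is {status}, expected 200")
    if "noindex" in parser.robots:
        issues.append("noindex directive present")
    if not parser.canonical:
        issues.append("no canonical tag")
    elif parser.canonical != url:
        issues.append(f"canonical points elsewhere: {parser.canonical}")
    return issues


page = '<head><link rel="canonical" href="https://example.com/a/"></head>'
print(indexability_issues("https://example.com/a/", 200, page))  # []
```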

Differentiation

- Template varies significantly between pages (not just city name swaps)

- Each page has unique data points or information

- Pages are visually distinct enough to not look like duplicates

- Content sections are customized based on page data
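You can get a rough feel for how similar two pages are before you publish them. The sketch below compares overlapping three-word sequences ("shingles") using Jaccard similarity. Google's own duplicate detection isn't public, so this is a stand-in for spotting obvious template swaps, not a reproduction of it.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping word sequences of the given length."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Shared shingles divided by total distinct shingles (1.0 = identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


austin = "Need a plumber in Austin? Our licensed plumbers fix leaks fast."
denver = "Need a plumber in Denver? Our licensed plumbers fix leaks fast."

print(jaccard(austin, denver))  # 0.5
```

On a one-sentence page, a single swapped word already leaves half the shingles shared. On a full page of boilerplate with one city name changed, the score climbs toward 1.0, which is the pattern to avoid.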

The honest test: Would you bookmark this page? Would you share it? Would you trust it as a source? If not, Google won't either.

How to Recover From a Failed Programmatic Launch

If you've already launched and most pages aren't indexed, here's how to recover.

Step 1: Stop Adding More Pages

Don't make it worse. Pause all new page creation until you've fixed the existing problem.

Step 2: Diagnose the Cause

Check Search Console for clues:

| Status | Likely Cause | Solution |
|---|---|---|
| "Discovered, currently not indexed" | Low crawl priority, quality concerns | Improve page quality, add internal links |
| "Crawled, currently not indexed" | Page quality below threshold | Substantially improve content (see our complete guide to fixing this status) |
| "Duplicate without user-selected canonical" | Pages too similar | Differentiate content between pages |
| "Soft 404" | Content too thin | Add substantial content |

Step 3: Improve Your Best Pages First

Don't try to fix 5,000 pages at once. Identify your 50-100 highest-potential pages and make them excellent:

- Add unique, substantial content

- Include original data or insights

- Build internal links from your strongest pages

- Earn a few external links if possible

Step 4: Request Indexing Manually

For your improved pages, use Search Console's URL Inspection tool:

- Enter the URL

- Click "Request Indexing"

- Monitor for indexing over 1-2 weeks

Don't spam this for thousands of URLs. Focus on your best pages first.

Step 5: Prune Low-Quality Pages

Sometimes the answer is fewer pages, not more. Consider:

- Removing pages with zero search demand

- Consolidating similar pages into stronger single pages

- Setting noindex on pages that aren't worth indexing

This can improve overall site quality signals and help your good pages get indexed.

For more on when to remove content, see our guide on content pruning vs. publishing.

Step 6: Wait and Monitor

Indexing changes take time. After making improvements:

- Check indexing status weekly

- Track which pages move from "not indexed" to "indexed"

- Adjust strategy based on what works

Recovery typically takes 2-3 months for significant improvement.

Tools for Monitoring Programmatic SEO

You need visibility into what's happening with thousands of pages. These tools help.

Google Search Console (Essential, Free)

The primary source of truth for indexing status.

Key reports:

- Page indexing (formerly Index Coverage): Shows indexed vs. not indexed pages by reason

- URL Inspection: Check individual page status

- Sitemaps: Verify sitemap submission and discovery

Log File Analysis

See exactly what Googlebot is doing on your site.

What log files reveal:

- Which pages Googlebot crawls (and how often)

- Crawl frequency trends

- Pages Googlebot ignores

- Server errors during crawling

Tools: Screaming Frog Log File Analyser, Logdy, custom scripts.
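A custom script can be very short. The sketch below counts Googlebot requests per URL and flags server errors, assuming your server writes the common "combined" access log format. The sample lines are invented. It also trusts the user-agent string, which can be spoofed, so verify the requesting IPs separately if the numbers matter.

```python
import re
from collections import Counter

# Matches the "combined" access log format; adjust if your server logs differently.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines: list[str]) -> tuple[Counter, Counter]:
    """Return (requests per path, 5xx errors per path) for Googlebot user agents."""
    hits, errors = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        hits[match["path"]] += 1
        if match["status"].startswith("5"):
            errors[match["path"]] += 1
    return hits, errors


BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Jan/2026:06:25:14 +0000] "GET /plumber-in-austin/ HTTP/1.1" 200 5123 "-" "{BOT}"',
    f'66.249.66.1 - - [11/Jan/2026:07:01:02 +0000] "GET /plumber-in-austin/ HTTP/1.1" 200 5123 "-" "{BOT}"',
    f'66.249.66.1 - - [11/Jan/2026:07:01:09 +0000] "GET /plumber-in-denver/ HTTP/1.1" 500 312 "-" "{BOT}"',
    '203.0.113.9 - - [11/Jan/2026:08:00:00 +0000] "GET /plumber-in-boise/ HTTP/1.1" 200 4980 "-" "Mozilla/5.0"',
]

hits, errors = googlebot_hits(sample)
print(hits.most_common())
print(dict(errors))
```

Pages that appear in your sitemap but never show up in `hits` are the ones Googlebot is ignoring.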

Crawl Budget Monitoring

Track how efficiently Google crawls your site.

Metrics to watch:

- Pages crawled per day

- Average response time

- Crawl request trends

Technical SEO Audit Tools

Catch issues before they cause indexing problems.

Run regular audits for:

- Broken internal links

- Duplicate content

- Missing metadata

- Slow pages

- Mobile usability issues

Regular technical SEO audits catch problems before they compound.

What Successful Programmatic SEO Looks Like

Let's look at sites that got it right.

Zapier: Integration Pages

Zapier has thousands of pages like "Connect [App A] to [App B]." Each page:

- Has unique content explaining the specific integration

- Shows real workflow examples

- Includes user reviews and use cases

- Links to related integrations

They didn't launch these all at once. They built gradually over years, adding integrations as demand grew.

Nomadlist: City Pages

Nomadlist has pages for every city digital nomads might visit. Each page:

- Contains crowdsourced, real data (cost of living, internet speed, safety)

- Is constantly updated with fresh information

- Has community engagement (reviews, Q&A)

- Offers unique value not available elsewhere

The data is genuinely unique. You can't get this information by combining other sources.

What They Have in Common

| Success Factor | Why It Matters |
|---|---|
| Unique data | Pages offer information not available elsewhere |
| Gradual growth | Scaled over years, not weeks |
| User engagement | Real users interact with and update content |
| Strong domain | Built authority before scaling programmatic content |
| Genuine value | Each page answers a real question with useful info |

Frequently Asked Questions

What is programmatic SEO?

Programmatic SEO is creating large numbers of web pages from templates and databases. Instead of manually writing each page, you build a system that generates pages automatically based on structured data. Common examples include location pages, comparison pages, and directory listings.

Is programmatic SEO worth it?

It can be highly valuable if done correctly. Sites like Zapier, Zillow, and TripAdvisor have built significant organic traffic through programmatic content. However, it requires patience, quality standards, and gradual scaling. Rushing the process or publishing low-quality pages typically results in wasted effort and potential site-wide quality issues.

How does programmatic SEO work technically?

You create a page template with variable sections, then populate those sections with data from a database. For example, a "[City] Weather" template might pull temperature, humidity, and forecast data for each city in your database, generating thousands of unique pages from one template.

How do you measure programmatic SEO success?

Track indexing rate (percentage of pages indexed), organic traffic per page, and revenue or conversions from programmatic pages. In Search Console, monitor the ratio of "indexed" to "excluded" pages. Aim for 80%+ indexing rate on programmatic content.

Why are my programmatic pages not getting indexed?

Common causes: launching too many pages at once (triggers quality filters), pages too similar to each other (duplicate content), thin content that doesn't add value, weak internal linking, or low site authority. Check Search Console's Page indexing report (formerly Index Coverage) for specific exclusion reasons.

How long does it take for programmatic pages to get indexed?

For established, trusted domains: 1-4 weeks for most pages. For new domains: 2-3 months for meaningful indexing progress. If pages remain unindexed after 3 months, there's likely a quality or technical issue to address.

The Real Secret to Programmatic SEO

Programmatic SEO isn't a hack. It's not a shortcut. It's not a way to skip the hard work of building a real website.

It's a scaling strategy that only works after you've done the hard work first.

The teams that fail try to use programmatic SEO as a substitute for authority. They think: "We don't have traffic or backlinks yet, but if we publish 10,000 pages, surely some will rank."

They don't.

The teams that succeed use programmatic SEO as an amplifier. They build a foundation first. They earn trust. They prove they can create valuable content. Then they scale what works.

Here's your action plan:

If you haven't launched yet: Build 10-20 manual pages first. Earn some backlinks. Wait 3-6 months before going programmatic.

If you've launched and failed: Stop adding pages. Improve your best 50-100. Build internal links. Be patient.

If you're scaling successfully: Keep the pace gradual. Monitor indexing rates. Never add more than 10% of indexed pages per week.

Programmatic SEO works. But only for sites that have earned the right to scale.

Don't publish pages that don't deserve to exist. Publish fewer pages that deserve to rank.